An unforgettable meetup with a perfect view of the Atlantic Ocean: I spoke right next to where visitors board the ferry to the historically significant Robben Island.

Session: Building AI Solutions in Azure AI Foundry

Following the philosophy “if it’s not broken, it doesn’t need fixing,” I delivered the same session I had presented in Johannesburg. This time, though, the experience was even better thanks to:

- More questions and interaction

- An even more engaged audience

- The opportunity to attend colleagues’ sessions

- A better learning experience overall

The session is a great introduction to Azure AI Foundry and to building end-to-end AI solutions.

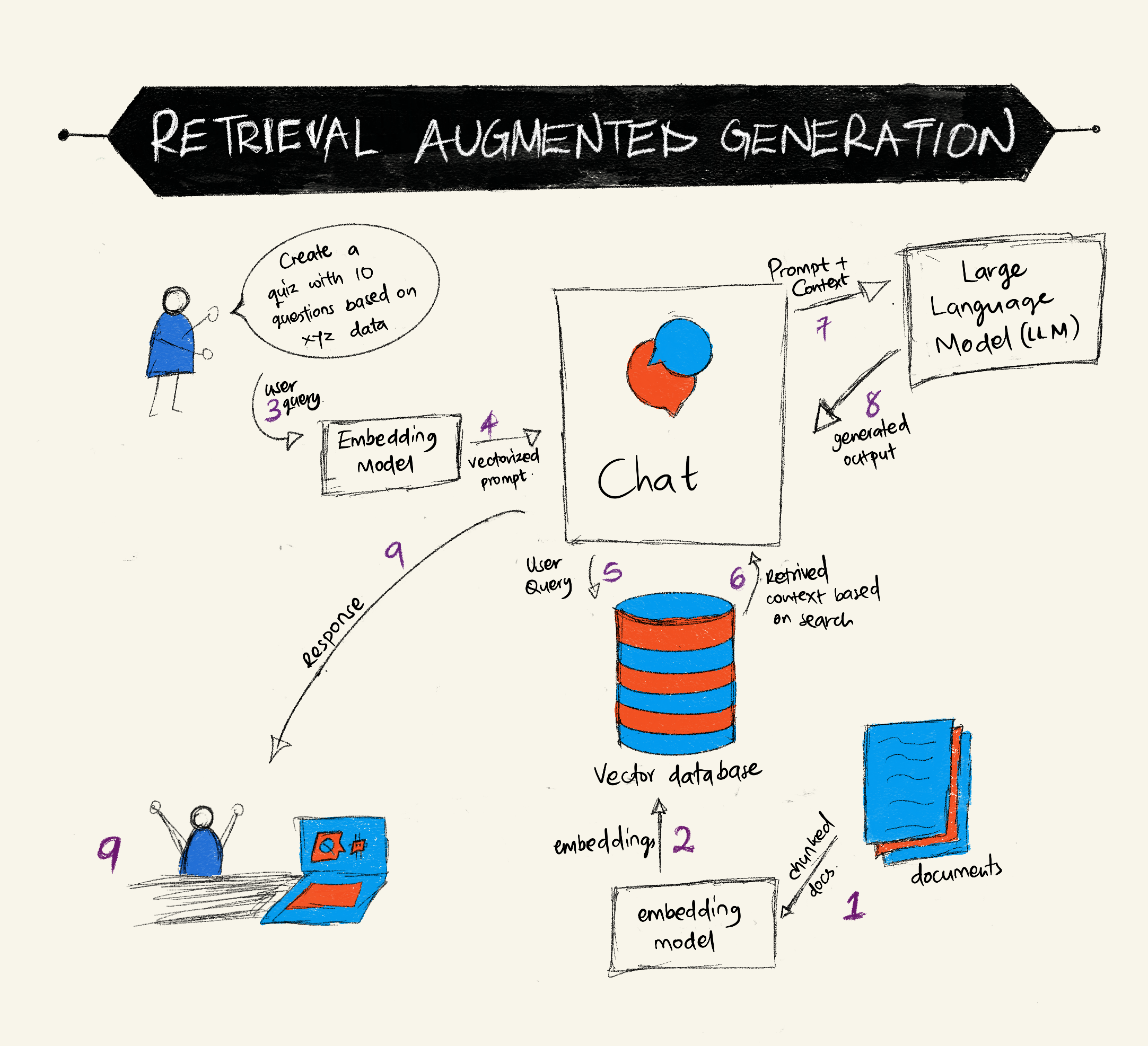

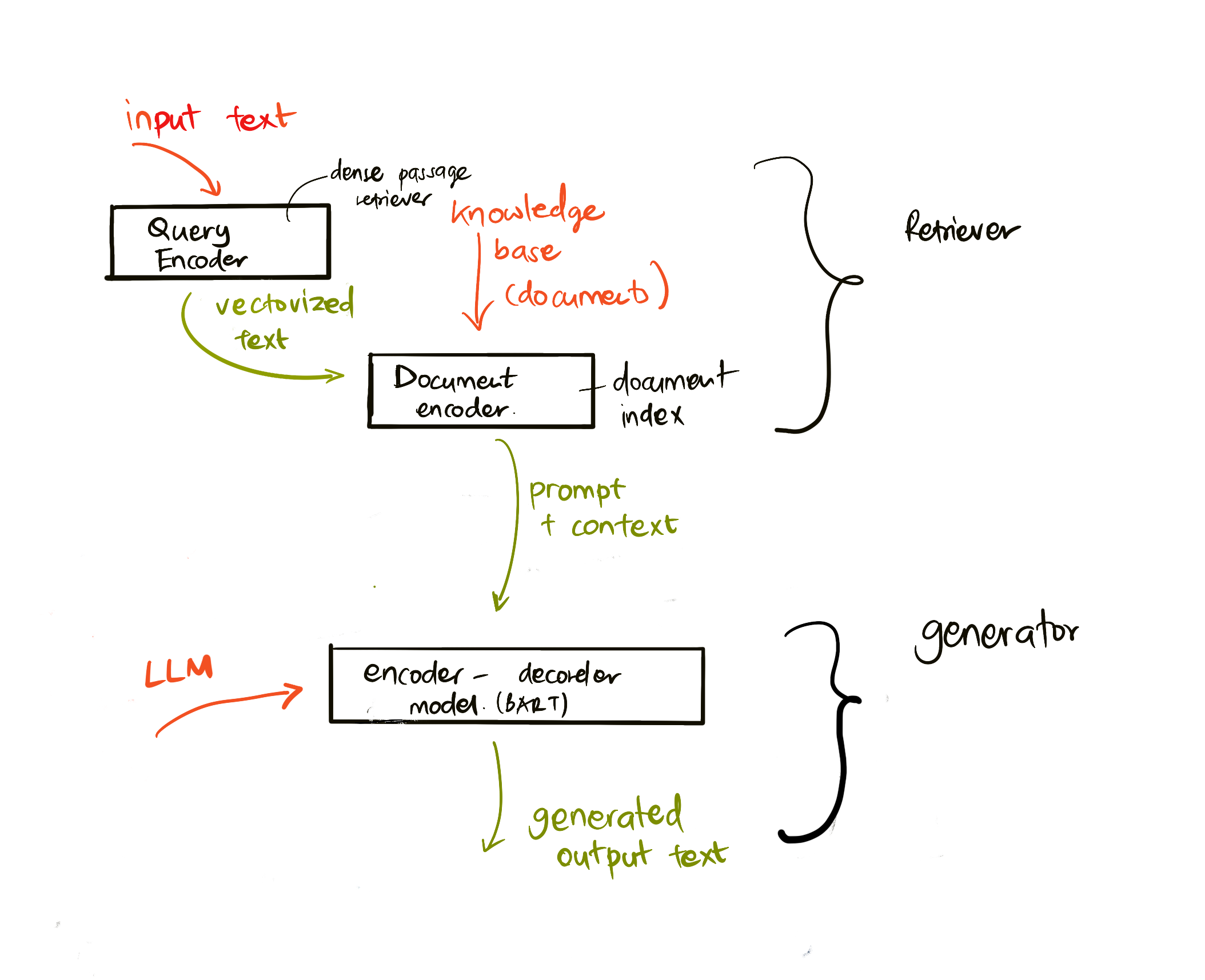

Deep Dive: Retrieval-Augmented Generation (RAG)

The session placed special focus on RAG, a technique that improves LLM responses by grounding them in your own data.

How RAG Works:

- Data Sourcing and Formatting: Collect and store your data in one location.

  - Depending on its size, you may need to break it into smaller pieces, a process called chunking.

  - To make the data understandable to the model, each chunk is converted into a numerical representation (a vector), a process known as vectorization.

  - Once vectorized, the data is stored in a search index, which makes it easy to search, much like a library catalog.

  - Finally, to ensure relevant results, the retrieved data is ranked by how relevant it is to the question.

- Retrieval: When a user asks a question, the app searches the index for relevant information.

- Augmentation: The retrieved data is added to the prompt to give the model extra context.

- Generation: The augmented prompt is sent to the LLM, which generates a more accurate, context-aware response.
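To make the steps above concrete, here is a minimal, self-contained Python sketch of the pipeline. It is an illustration under simplifying assumptions, not how Azure AI Foundry implements RAG: it swaps in a toy bag-of-words vectorizer for a real embedding model and a brute-force cosine-similarity scan for a search index and ranker. The documents in `DOCUMENTS` and all function names (`chunk`, `vectorize`, `build_index`, `retrieve`, `augment`) are hypothetical. The generation step is only a comment, because calling a model requires a real deployment.

```python
import math
import re
from collections import Counter

# Hypothetical sample data standing in for "your own data".
DOCUMENTS = {
    "returns.txt": "Customers can return items within 30 days. Refunds are issued "
                   "to the original payment method after the item is inspected.",
    "shipping.txt": "Standard shipping takes 3 to 5 business days. Express shipping "
                    "delivers the next business day for an extra fee.",
}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def chunk(text: str, size: int = 12, overlap: int = 3) -> list[str]:
    """Chunking: split text into windows of `size` words that overlap by `overlap` words."""
    words = text.split()
    step = size - overlap
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def vectorize(text: str, vocabulary: list[str]) -> list[float]:
    """Vectorization: toy term-count vector over a fixed vocabulary.
    Assumption: a real RAG app would call an embedding model here instead."""
    counts = Counter(tokenize(text))
    return [float(counts[term]) for term in vocabulary]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def build_index(documents: dict[str, str]):
    """Indexing: chunk every document, build a shared vocabulary, store one vector per chunk."""
    chunks = [(source, piece) for source, text in documents.items() for piece in chunk(text)]
    vocabulary = sorted({word for _, piece in chunks for word in tokenize(piece)})
    entries = [(source, piece, vectorize(piece, vocabulary)) for source, piece in chunks]
    return vocabulary, entries


def retrieve(query: str, vocabulary: list[str], entries, top_k: int = 2):
    """Retrieval + ranking: score every chunk against the query and keep the best `top_k`."""
    query_vector = vectorize(query, vocabulary)
    scored = [(cosine(query_vector, vector), source, piece) for source, piece, vector in entries]
    scored.sort(key=lambda item: item[0], reverse=True)
    return scored[:top_k]


def augment(query: str, retrieved) -> str:
    """Augmentation: paste the retrieved chunks into the prompt as grounding context."""
    context = "\n".join(f"[{source}] {piece}" for _, source, piece in retrieved)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    vocabulary, entries = build_index(DOCUMENTS)
    question = "How long does express shipping take?"
    prompt = augment(question, retrieve(question, vocabulary, entries))
    # Generation: in a real app this prompt would be sent to a chat model
    # (for example, one deployed in Azure AI Foundry); here we only print it.
    print(prompt)
```

For this question, the chunks from `shipping.txt` rank highest because they share the words "express" and "shipping" with it, so they become the prompt's context. Swapping `vectorize` for an embedding model and the linear scan for a real search index keeps the same overall shape.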

Sounds simpler now, right? You can learn more about implementing RAG in our Generative AI for Beginners course.

Resources

💬 Join the conversation! Connect with our community on Discord to continue the discussion, ask questions, and stay updated on the latest in AI and technology.